Rules as Code (RaC) is a trend in which official laws and regulations are interpreted or defined and published in a machine-consumable form. The idea is to make it more feasible to operationalize services that comply with laws and regulations by design (Compliance by Design), while maintaining a delicate balance between the important values that feed into trust. Such services include granting people a certain benefit, or giving people possibly personalized advice on a single service or on the combination of all services, as in so-called Personal Regulation Assistants (PRAs)[1]. They also include services for experts and decision makers within governments[2]. Within the GovTech4All program, we wrote about this in early 2024[3]. In a nutshell, we concluded that several existing methods are in use that define how to do certain distinguishable steps in applying RaC. Still, none of them seemed to cover all steps well enough, which calls for more standardization on interoperability.

Applying RaC is equivalent to using rule-based systems. It is a form of model-based or symbolic AI, a long-established, knowledge-driven form of AI, in contrast with the currently hyped data-driven forms of machine learning such as deep learning and generative AI using Large Language Models (LLMs) like ChatGPT. These data-driven approaches may seem more workable, despite the ever-increasing processing power they require. Yet they generally lack reliability, explainability, and other important values because, as Apple researchers recently published[4], current LLMs only memorize word associations in vast amounts of input data, and thereby the reasoning steps contained in that data. They are incapable of genuine reasoning, and the researchers suggest a symbolic benchmark (GSM-Symbolic) for assessing LLMs on this matter. Like any form of AI, RaC requires quality knowledge to be represented in a machine-consumable form, so that a reasoner can efficiently search for and find solutions in which a given set of knowledge logically holds as truth, or can explain why not. This kind of digitization of knowledge has been done at many levels concurrently[5] for a long time and largely belongs to the research field of knowledge representation and reasoning (KRR).
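To make the idea of a reasoner that either concludes something or "can explain why not" a little more tangible, here is a minimal sketch in Python. It is not a real reasoner and not any particular RaC method: the `Condition` class, the `evaluate` function and the permit conditions are all hypothetical names invented for this illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Condition:
    """One explicitly described condition of a (hypothetical) rule."""
    description: str
    holds: Callable[[dict], bool]


def evaluate(conditions: list[Condition], facts: dict) -> tuple[bool, list[str]]:
    """Return whether all conditions hold, plus the descriptions of those that do not."""
    failed = [c.description for c in conditions if not c.holds(facts)]
    return (not failed, failed)


# Hypothetical conditions for some permit; not taken from any real regulation.
PERMIT_CONDITIONS = [
    Condition("applicant is a resident", lambda f: f.get("resident") is True),
    Condition("applicant owns a vehicle", lambda f: f.get("vehicles", 0) >= 1),
]

granted, reasons = evaluate(PERMIT_CONDITIONS, {"resident": True, "vehicles": 0})
print(granted, reasons)
```

Because every condition carries its own description, a negative outcome comes with the list of conditions that were not met, which is the kind of explanation a data-driven model does not give by construction.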

Nowadays, people generally expect services to be accessible through internet channels and to be automated as much as possible, with a minimal number of incidents, so that using them adds as much value as possible with little effort. For organizations responsible for operationalizing services, it is probably not feasible to avoid using some form of RaC to some extent. RaC already seems to have been widely used within organizations for quite some time, but far less across organizational boundaries.

So in practice, there is still no widely adopted free and open standard for making public knowledge such as laws, policies, and standards interoperable, so that the knowledge is shared openly for direct machine-consumable reuse by others. This results in many copies of the same knowledge represented in different ways, even in standardization itself. For instance, across all ISO standards, there are over 100 definitions of the concept “service”[6]. More importantly, there may be many slightly different implementations of the same small piece of law as more formalized rules where this should not be the case. This is because different teams of experts might refine (interpret) knowledge differently during digitalization, even where it is intended by design not to be context dependent. It is also much more efficient to reuse official formalized knowledge from a single governable source when operationalizing (public) services. Publishing such knowledge publicly feeds into feasibility, transparency and trust; this article returns to this later on. But where knowledge is published for reuse from a governed single source, essentially to be held as the official truth, and public services work accordingly, it is essential to see this knowledge as agreements between all the people in society who have some stake in it. Just executing rules as code as the operationalization of public services will result in disaster. Let us explain why.

Rules as Agreements

The thing with rules is that they are agreements in the context of a (public) service, just like the legislation of which they are a more deterministic formalization. Legislation describes a set of agreements, but to automate the operationalization of services, any relevant knowledge may have to be represented more formally as rules. Laws, policies, standards, enterprise architecture, and contracts, but also a decision to grant a person a benefit, a decision that a person is known to have a certain last name (so personal data in general, or a verifiable version thereof in the form of issued Qualified Electronic Attestations of Attributes, QEAAs), or even a one-sided decision by someone to accept a decision: these are all agreements on knowledge. And in the end, agreements are decisions to be made by, or at least accepted by, all people affected, in order to gain and keep their trust.

You cannot automate decision making in a way that upholds important values like fairness[7], inclusiveness, accessibility[8], explainability, and therefore also transparency[9] without the possibility of interaction, at any time, with all the people who actually need to agree. There may simply be no known universal truth on which to decide these things without perpetually taking all people’s experiences into account where necessary, and you can never be entirely sure that you know a universal truth. In the context of fake news and post-truth, this line of thought is currently often attributed to populism as a route to power[10]. It then carries the connotation that it is bad for people to question what is generally seen as truth when their gut says they cannot accept it, and they are judged to be dumb or worse. Still, in operationalizing public services for all people within society, this should not be a reason to stop taking every person seriously and respectfully, without judgment, taking all people’s experiences into account and engaging with the conflict. Likewise, local experts often still have a lot of tacit knowledge that has not (yet) been feasible, or desirable, to represent and share as knowledge. Legislation often leaves room for this tacit knowledge, and rules might need to do so as well.

Even if rules were deemed 100% “truthful” by almost all people and shared with all experts in all organizations that operationalize services using these rules, it would still be necessary to structurally involve the experience of the citizens and experts affected by such rules in the incident, change and risk management (quality assurance) of services, especially when they were not directly part of the decision making processes that resulted in these rules[11]. Taking this into account by design, and perpetually engaging, in a respectful and non-judgmental way, with as many people as feel the need, to come to agreement on what they can live with as being held as truth, is what feeds into trust, more than a focus on privacy in data sharing alone. Not coincidentally, EU laws like the GDPR and the AI Act increasingly require governments to take this into account to some extent, as has also been noted by Fraser and Barraclough[12].

For some services, people might not care about the rules. Decisions like granting a new parking permit may of course be made automatically, but only as long as the citizen requesting it agrees with the decision and has, at any time, accessible alternative human channels for the service that delivers this decision as its added value. It can also be assured that the automated decision will not be retracted afterwards unless this is agreed on. This goes together with support for other cases, in which decisions are more likely to affect people’s lives or work negatively, even if only in their own experience. There it might be better to refrain from automated decision making from the start and instead automate services and tooling that support the decision making processes with these people (both citizens and local experts), within the context of all known and relevant agreements, including support for informing people about, and explaining to them, all information that is known, in a form they can consume. This is not straightforward to do, though, especially as knowledge is not publicly shared enough for this, and often not in an interoperable way. It also easily becomes an overwhelmingly big pile of spaghetti, making things that should be kept easy feel too complex even for experts. Explaining public services is not just about summarizing the rules that apply, but also about why these exist with regard to the personal needs of people and how they came to be decided on. In practice, a lot of this information is kept hidden or gets lost. And if not, it is not shared in a way that makes it easy for others to reuse, even though this information is knowledge too, which at some time was agreed upon within a decision making process and which in turn needs explaining for acceptance, and so on.

So RaC is all about open standardization of knowledge representation and reasoning (KRR) around decision making concerning (public) services, so that these are operationalized in interaction with the people affected by the decision making. People need to be informed about these services, know about their existence, and be able to participate in them and/or get assistance, in order to agree with, or at least accept, all relevant decisions. These decisions relate to just five main decision making processes that need to be defined for a service to be operationalized properly. These processes decide on: the definition of the service; the decisions that form the value the service adds as a result of its operation as defined in its definition; how incidents are managed; how changes are managed; and how risks are managed, perpetually looking into improvement of the service as an incident prevention measure. Luckily, these five processes are the only ones that a service actually needs to implement[13]. They mostly require interaction with people, in essence to obtain more knowledge, and can therefore be automated only to a certain extent, in a sociotechnical way. This cannot be placed out of scope when applying RaC, because all these decisions matter as knowledge within the context of the knowledge to be represented in the form of rules. This is why RaC itself can never be automated entirely either. It needs to be part of the operationalization of services and embedded within it. Of course, this does not mean that operationalization cannot benefit from applying RaC; it is already being done in production in many places locally, to a certain extent.

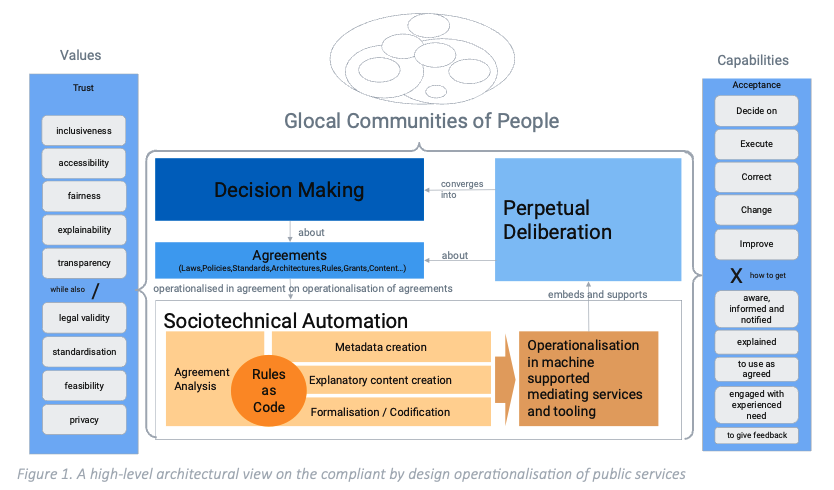

This brings us to an improved high-level overview of what Rules as Code actually encompasses within the context of society, presented in Figure 1 in the next section.

RaC within the context of (public) services

Any community consists of people who also belong to many other communities, whether these are local, like municipalities, or online and global. These communities typically need to agree on things so that their needs are addressed in a way everyone can live with, and in compliance with all agreements that already apply within these communities or within communities that include them (municipalities as part of a nation, for instance).

Perpetual deliberation about the current world, with all the existing agreements, operationalized in the way they are, together with people’s experiences and needs, needs to converge into efficient and effective decision making while also upholding important values, so as not to lose the trust of the people who need to accept the outcome. This also means that the decision making processes for coming to agreement are perpetual. None of this is new. But it would be better to embed the support for perpetual deliberation and decision making into the services that operationalize the agreements. As rules are agreements, this also applies to rule making. The focus cannot be only on the capability of getting people to make use of agreements through the services that operationalize them. That capability cannot be seen as separate from the capabilities that make people aware, informed, and notified of the existence of these agreements, explain them in a personalized way, make people experience being part of a community that engages with their needs relating to the agreement as they experience it, and in general enable people to give feedback for the purpose of perpetual improvement. And for all these capabilities, agreements need to be made in a similar way on how the capabilities themselves are perpetually decided upon, executed, corrected, changed and improved.

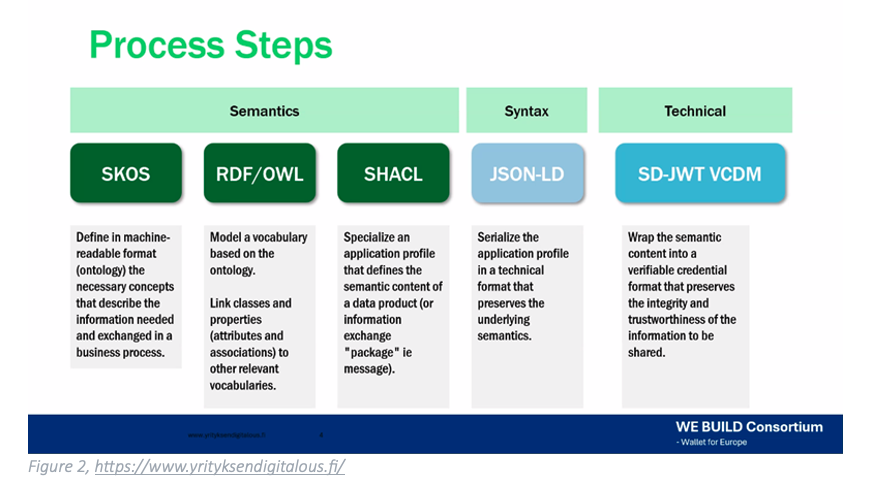

This requires sharing non-personal knowledge as linked data through linked data stores, consisting of RDF triples (serialized, for instance, as Turtle) that reference concepts by URIs to represent knowledge. On top of this there are standards like SHACL, JSON-LD, OWL, and so on, as explained in Figure 2. This also hooks into verifiable credential standards. However, these standards have been evolving and have been adopted only slowly for many years. Various “standards” have grown on top of them, in all kinds of variations, which makes the learning curve seem steep. There has, however, been a particular group of standards for the interoperability of rules for many years, called the Rule Interchange Format (RIF), which has been a W3C Recommendation since 2010. It also has numerous extensions for all kinds of model-based AI. Yet not much current information about these standards is shared publicly. There is a lot of focus on data sharing, but rules are apparently not shared. After all, if you have invested in making them and it is not clear how to share them in an interoperable way and for whom, why would you put effort into this? Where organizations compete in the private sector, refraining from investing in this might make sense to some, but within the public sector it just doesn’t. Also, when you want your systems to be automated and also explainable and transparent, which will become increasingly important, you will have to openly publish knowledge represented in interoperable forms of rules.
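As a rough illustration of what "knowledge as triples referencing concepts by URIs" means in practice, the sketch below models a handful of triples as plain Python tuples and queries them by pattern. It deliberately avoids any RDF library, and it simplifies by treating literals as plain strings. The `https://example.org/` identifiers and the `basedOn` property are hypothetical and made up for this example; only the `rdf:type` and `skos:prefLabel` URIs are existing W3C identifiers.

```python
from typing import Iterator, Optional

Triple = tuple[str, str, str]  # (subject, predicate, object)

RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
SKOS_PREF_LABEL = "http://www.w3.org/2004/02/skos/core#prefLabel"
EX = "https://example.org/"  # hypothetical namespace, for illustration only

TRIPLES: list[Triple] = [
    (EX + "service/pension-age-check", RDF_TYPE, EX + "vocab/PublicService"),
    (EX + "service/pension-age-check", EX + "vocab/basedOn", EX + "law/aow/article-7a"),
    (EX + "law/aow/article-7a", SKOS_PREF_LABEL, "Pension age"),
]


def match(triples: list[Triple],
          s: Optional[str] = None,
          p: Optional[str] = None,
          o: Optional[str] = None) -> Iterator[Triple]:
    """Yield every triple that fits the pattern; None acts as a wildcard."""
    for triple in triples:
        if ((s is None or triple[0] == s)
                and (p is None or triple[1] == p)
                and (o is None or triple[2] == o)):
            yield triple


def services_based_on(triples: list[Triple], source_uri: str) -> list[str]:
    """List the subjects that declare the given agreement source as their basis."""
    return [s for s, _, _ in match(triples, p=EX + "vocab/basedOn", o=source_uri)]


print(services_based_on(TRIPLES, EX + "law/aow/article-7a"))
```

Because both the service and the piece of law are identified by URIs, anyone who publishes further triples about the same URIs adds to the same graph, which is the interoperability property the paragraph above relies on.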

Relatively recently, the EU published standards on top of all this for describing public services: the Core Public Service Vocabulary Application Profile (CPSV-AP) and the Core Criterion and Core Evidence Vocabulary (CCCEV). The latter describes criterion and evidence information relating to Qualified Electronic Attestations of Attributes (QEAAs, which can be seen as verifiable credentials). QEAAs are to be issued to the European Digital Identity (EuDI) wallets of people, who can use them to hand over their identity and other personal information to other organizations and have it verified, in compliance with the eIDAS 2.0 regulation. Currently, all this is still in development, though it is highly likely to be adopted across the EU member states in the future. The CCCEV standard already refers to the RIF standard for describing criteria relating to personal information in QEAAs. This shows a path towards client-side PRA services embedded in, or working together with, EuDI wallets, which can operate on this. Personal data then does not have to be shared at all in order to be informed, notified or personally advised. This also enables more accessibility and inclusivity, as people can gather their verifiable information and share it, along with a request for a decision[14] in the context of a service, with the appropriate organization in an almost effortless way, while being offered full transparency and access to other channels for personalized human support and for incident, change and risk management. This kind of client-side (on the edge) PRA service is exactly what the Dutch PRA project, which is also related to the GovTech4All project, experimented with using a basic prototype of a EuDI wallet, while not yet incorporating the mentioned standards.

With the use of governed Self-Sovereign Identity wallets, whether these are future EuDI wallets, some sort of pods or vaults, or for the time being even legacy services to which GDPR data portability services have been added, rules can turn data into information by providing the context that defines the semantics of the data in a traceable way, potentially all the way down to the specific parts of any agreement that directly or indirectly impact the semantics of the data. EU dataspaces can then be formed around agreeing on the rules of the public services that are provided in the dataspace domain, or even around agreements on why these services were identified as needed. After all, these current or future services, rather than the sharing of data, form the purpose of a dataspace.

So currently evolving standards like the CPSV-AP, CCCEV, and ELI (European Legislation Identifier) seem well placed to play an important role in supporting all this in an automated way, through tooling and machines that mediate as part of the socio-technical system in which public services are operationalized. Public services need to be human literate instead of requiring people to be digitally literate. This also applies to RaC methods and tooling where they are a part of it.

A small example

Let us look at a small example to illustrate all this more concretely. In the Netherlands, article 7a of the National Old Age Pensions Act specifies how to determine the exact date on which a citizen becomes eligible to receive an old age pension. This piece of information is heavily relied upon in other legislation: it is already referenced at least 100 times[15] at all kinds of levels within legislation and policies, so it is quite important in practice.

Typically, such a provision could state that a person becomes eligible on the date they reach a certain fixed age. In the Netherlands, the definition of this age has been made more complex in order to factor in the average life expectancy of people in the Netherlands, although, as has been agreed upon, it can only go up, and then only by a few months, but never down.
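The "only up, by a few months, never down" constraint can be sketched as a small function. To be clear, this is not the formula of article 7a: the function names, the three-month cap and the way a birthday at the end of a month is clamped are all assumptions chosen purely to illustrate how such a constraint might be written down and checked.

```python
import calendar
from datetime import date


def adjust_pension_age(current_months: int,
                       proposed_months: int,
                       max_step_months: int = 3) -> int:
    """Apply a proposed pension age, allowing increases of at most
    max_step_months (an assumed cap) and never a decrease."""
    if proposed_months <= current_months:
        return current_months
    return min(proposed_months, current_months + max_step_months)


def add_months(start: date, months: int) -> date:
    """Add whole months to a date, clamping to the last day of the target
    month (an assumption; the law may prescribe something else)."""
    total = start.year * 12 + (start.month - 1) + months
    year, month_index = divmod(total, 12)
    month = month_index + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def eligibility_date(birth_date: date, pension_age_months: int) -> date:
    """Date on which a person reaches the given pension age."""
    return add_months(birth_date, pension_age_months)


age = adjust_pension_age(current_months=804, proposed_months=810)
print(age, eligibility_date(date(1960, 5, 15), age))
```

Even in this toy version, two interpretation choices (the size of the step and the end-of-month handling) had to be made explicitly, which is exactly the kind of decision that should be agreed on and published rather than hidden in an implementation.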

The National Old Age Pensions Act falls under the authority of the Ministry of Social Affairs, but the Sociale Verzekeringsbank (SVB, the Dutch social insurance bank) is the organization appointed to operationalize it, which it also does in the form of an online test tool. This tool is a very tiny, limited PRA, whereas a full PRA would probably integrate many if not all agreements within a single service channel. It can be found on a Dutch national governmental portal[16] and through the YourEurope[17] service directory. But it is a web form to be used by humans, and it uses an algorithm in a black box. How the agreement expressed in article 7a of the National Old Age Pensions Act has been analyzed, formalized, and codified into the operationalized algorithm within this tiny PRA-like tool is nowhere to be found, which makes deliberation about it very inefficient. In this case, hopefully, there are still experts who know, and you might say you could figure it out yourself. But having different experts model this tiny piece of agreement as knowledge, using several different rules as code methods, seems to indicate otherwise[18].

Note that across the 100+ places where this piece of information is referenced, many systems have probably reimplemented the same algorithm. This introduces a risk of noncompliance, even though the agreement does not leave much room for differences in interpretation and is simply not designed to be context dependent. Where there is such room in an agreement that is obviously intended to be mathematically precise, it is essential to additionally agree on policies that formalize the interpretation, make it deterministic, and have it evaluated using test cases (unit and integration testing for RaC). But even with rigorous testing and deliberation in a design phase, there will probably be incidents that call for correction and change later on. And sometimes it must be concluded that agreements just cannot be formalized in a calculable way and also be ethical in every case. The formalization can then only make clear what to do with this bias in practice, often relying on the tacit knowledge of experts who are allowed by design to make decisions more locally, considering the experience of the affected citizen. Otherwise, these experts will be the ones confronted with the errors of noncompliance, which will hopefully be corrected by them instead of ignored.
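A shared, agreed set of test cases is one way to surface such diverging reimplementations. The sketch below is hypothetical throughout: the two "team" implementations, the rule that eligibility starts on the eligibility date itself, and the test cases are invented for illustration and do not describe any existing system.

```python
from datetime import date
from typing import Callable

# A rule takes (eligible_from, today) and answers: is the person eligible today?
Rule = Callable[[date, date], bool]


def is_eligible_team_a(eligible_from: date, today: date) -> bool:
    """Reading A: eligible from the eligibility date onwards."""
    return today >= eligible_from


def is_eligible_team_b(eligible_from: date, today: date) -> bool:
    """Reading B: eligible only after the eligibility date (differs on the boundary day)."""
    return today > eligible_from


# Agreed test cases: (eligible_from, today, expected outcome).
AGREED_CASES = [
    (date(2027, 5, 15), date(2027, 5, 14), False),
    (date(2027, 5, 15), date(2027, 5, 15), True),
    (date(2027, 5, 15), date(2027, 5, 16), True),
]


def find_divergences(implementations: dict[str, Rule],
                     cases: list[tuple[date, date, bool]]) -> list[tuple[str, date, date]]:
    """Return (implementation name, eligible_from, today) for every failed agreed case."""
    divergences = []
    for name, rule in implementations.items():
        for eligible_from, today, expected in cases:
            if rule(eligible_from, today) != expected:
                divergences.append((name, eligible_from, today))
    return divergences


print(find_divergences(
    {"team_a": is_eligible_team_a, "team_b": is_eligible_team_b},
    AGREED_CASES,
))
```

The interesting part is not the code but the test cases: once they are published alongside the agreement, every reimplementation can be checked against the same interpretation, and a failing case becomes a concrete item for incident and change management.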

Where to go from here

RaC is part of a socio-technical system. In this system, people take part in many decision making processes, though often only very indirectly and often unconsciously. But where and when people are affected, they need to be offered a way to take part in the relevant decision making processes in order to gain their trust. To engage people, such mechanisms need to be accessible, safe, relevant, credible and responsive[19]. People should at least be informed so honestly, in their own language and in a way that makes sense in their own life experience, that they understand enough to trust the agreements being made, and experience them as being made with them (power-with) instead of for them (power-over).

This is not easy. If you think you can persuade, nudge or force people into agreement, you are actually being manipulative in a non-loving way, whether consciously or not. At first this might seem to work, but in the end it can be very detrimental to people’s trust. It is no coincidence that trust is the first main value identified in the NORA[20]. Building on RaC without taking all of this into account is a recipe for disaster. But the other way around, governments not improving the support for, and the efficiency of, decision making, knowledge sharing and transparency within society might result in something worse, though less visible. This is where Europe can distinguish itself even further from other regions, like China and the United States, that seemingly need to do more in a value-driven and trust-building way by design. Europe is not there yet either, and it is lagging behind economically. This has sometimes been attributed to our restrictive laws focused on privacy as a value and on the values around the usage of AI and automated decision making. If done right, however, upholding social inclusion within collaborative innovation on rules for decision making processes, these laws should make Europe a safer and sounder place to live than elsewhere[21].

So, what might be the right way to start improving all these things in the EU? It is necessary to understand that it does not need to be, and frankly cannot be, a big-bang change; it can be done in small steps.

Incidentally dumping datasets on some portals is not going to solve this. Standardizing the way datasets are described, for instance through the DCAT standard, is a step forward, but often more is needed, as it does not describe the knowledge represented by the data in an interoperable way. Also, when knowledge is perpetually changed and improved, datasets need to be real-time data sources connected to a single source of the knowledge. This should probably bring more people back to working with the aforementioned W3C open standards that make up the idea of the semantic web, along with newer developments around verifiable linked data. Luckily, this is taking place already, but only within the limited scope and frame of data sharing through EU dataspaces and EuDI wallets, as well as around YourEurope as a high-level service directory.

In general, within the scope of rules as code, improving this could start with the following steps:

- Identify all public knowledge written down as is, publish it if possible[9], and make it referenceable. This covers not only laws but all agreements at any level, excluding, of course, private knowledge that contains personal information.

- Analyze and integrate knowledge by adding cross-references or making improvements where this is easy to do, including through standardization, so that 100 definitions of a certain concept are integrated into a single definition that works in all contexts. Alternatively, just live with the multiple definitions for the different contexts, but add cross-referencing relations where this makes sense.

- Formalize, codify, and deliberate on knowledge in such a way that it can be operationalized ethically by design, so that it supports the very ongoing decision making processes in which the agreements that form the service in question are to be corrected, altered, and improved. Often this is already part of the agreements anyway.

This means standards need to be agreed on for the following:

- How to reference (and find) specific parts of any agreement. It seems obvious that the ELI standard should be selected for this and improved where necessary. There is probably a lot more that can be offered through free open services and tooling around this standard, so that it becomes far easier to use effectively for any kind of agreement.

- How services are identified and described at a meta level, focused on operationalization, with references to the formalized rules and agreements that define how the service operationalizes a standard set of capabilities to add value, in what channels, and so on. In the end, it then becomes traceable how agreements were made, but also where it was agreed how to correct, change, and improve them. This includes references to formalized rules but also to explanatory content. It seems obvious that the CPSV-AP and CCCEV standards should be selected for this initially and improved where necessary. We also need standards for publishing these descriptions, around which services will form, so that they become far easier to use effectively. Within the Dutch PRA project, to prevent a spaghetti of rules, it was suggested that any agreement source be kept as a structuring unit when elaborating it into services and formalized rules, making these context-dependent modules. This can also lead to better ways to reference these things. It means that when a higher-level law is updated, this can automatically result in updated services, or at least in a specific, detailed to-do list on what to change or improve to comply with the change in the higher-level agreement. Also, ISO/IEC/IEEE 42010:2022 seems usable for structuring knowledge around any agreement as the “Entity of Interest”, including explanatory knowledge for specific kinds of stakeholders in the agreement. This hooks into ICT architecture foundations in general.

- How knowledge is to be integrated around identified services, and how the concepts within the knowledge are cross-referenced in a manageable way. The obvious initial choice is to follow how semantic web standards propose to do these things. In the Netherlands, a so-called NLSBB standard has been introduced, which heavily relies on and wraps the SKOS(-LEX) standard. But there is probably a lot more that can be offered through free open standards, services, and tooling around such agreements, so that they become far easier to use effectively.

- How the formalized rules that represent the agreement on a service's operation are to be decided upon and used, but also corrected, changed, and improved, and how they get published in such a way that people (experts) know about their existence, get them explained, and so on. This might also involve improving the CPSV-AP, but there is probably a lot more that can be offered through free open services and tooling around these services, so that they become far easier to use effectively. Most importantly, rules need interoperability. The RIF standard does not really seem to support this enough, but that is where it can start. One could select Answer Set Programming (some RIF-CASPD evolution) as a standard, such that any RaC solution would want to be able to export and import rules expressed in this way, but it would need to be augmented with additional information such as standardized explanatory content (at least for experts to begin with).
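The suggestion in the list above to keep each agreement source as a structuring unit, so that an update to a higher-level law yields a to-do list of everything derived from it, can be sketched as a simple traversal of "derived from" relations. The identifiers and the `DERIVED_FROM` mapping below are hypothetical; in practice such relations would come from published, referenceable service descriptions rather than from a hard-coded dictionary.

```python
from collections import deque

# Hypothetical "derived from" relations: artifact -> the agreement sources it elaborates.
DERIVED_FROM: dict[str, list[str]] = {
    "policy/pension-age-interpretation": ["law/aow/article-7a"],
    "rules/pension-age-v1": ["policy/pension-age-interpretation"],
    "service/pension-age-check": ["rules/pension-age-v1"],
    "service/parking-permit": ["law/municipal-parking"],
}


def todo_after_update(derived_from: dict[str, list[str]], updated_source: str) -> list[str]:
    """List every artifact that directly or indirectly derives from the updated
    source, in breadth-first order (closest dependents first)."""
    # Invert the relation: source -> artifacts that were derived from it.
    dependents: dict[str, list[str]] = {}
    for artifact, sources in derived_from.items():
        for source in sources:
            dependents.setdefault(source, []).append(artifact)

    todo: list[str] = []
    seen = {updated_source}
    queue = deque([updated_source])
    while queue:
        current = queue.popleft()
        for artifact in dependents.get(current, []):
            if artifact not in seen:
                seen.add(artifact)
                todo.append(artifact)
                queue.append(artifact)
    return todo


print(todo_after_update(DERIVED_FROM, "law/aow/article-7a"))
```

The result is only a list of what needs attention; whether each item can be regenerated automatically or needs deliberation with the people affected remains a decision for the change management process of the service in question.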

Let's start doing all this properly, not by ignoring what is out there already and reinventing wheels, or by limiting choice where that is not necessary, but by connecting the dots, getting everyone to agree on a similar mindset that oversees the whole and identifies the purpose, and promoting collaboration around this mindset, which perhaps, in the end, is what the EIRA will need to represent. As an example and an attempt to get things going, we intend to craft an experimental service description around article 7a of the Dutch National Old Age Pensions Act that aligns with the CPSV-AP and uses RIF-CASPD to describe the rules in an interoperable answer set programming way, in relation to several known other representations that were developed by other parties. We will publish this by the end of 2024 in our GitLab[18], so stay tuned.

Sources:

[1] For instance, in France, OpenFisca (https://openfisca.org) is being used to provide a testing tool to advise citizens on over 1000 aids in 5 minutes (https://mes-aides.1jeune1solution.beta.gouv.fr/simulation/individu/demandeur/date_naissance?utm_source=1jeune1solution). In the Netherlands, a project experimented with a “Personal Regulation Assistant” app as a delivery channel for all public services.

[2] Such as Euromod (https://euromod-web.jrc.ec.europa.eu/) and OpenFisca (https://openfisca.org), which focus on modeling the tax-benefit systems of nations for monitoring purposes or for research resulting in advice for decision makers.

[3] https://regels.overheid.nl/cms/uploads/Ra_C_assessment_definite_version_d4910c102c.pdf

[4] Mirzadeh, I., Alizadeh, K., Shahrokhi, H., Tuzel, O., Bengio, S., & Farajtabar, M. (2024). Gsm-symbolic: Understanding the limitations of mathematical reasoning in large language models. arXiv preprint arXiv:2410.05229. https://arxiv.org/pdf/2410.05229

[5] Meng Weng Wong, Rules as code: Seven levels of digitisation, Singapore Management University, 2020

[6] https://usm-portal.com/100-iso-and-iec-definitions-of-service/?lang=en

[7] Sandra Wachter, Brent Mittelstadt, Chris Russell, Why fairness cannot be automated: Bridging the gap between EU non-discrimination law and AI, Computer Law & Security Review, Volume 41, 2021, 105567, ISSN 0267-3649, https://doi.org/10.1016/j.clsr.2021.105567.

[8] Research on the PRA project identified value conflicts between roughly Accessibility, Inclusiveness, Explainability, Transparency on the one hand and Feasibility, Standardisation, Compliance (Legal validity) and Privacy on the other hand. I.F. Hoppe, A.S. Sattlegger, Nitesh Bharosa, H.G. van der Voort. 2024 Managing Value Conflicts in Digital Proactive Public Services. http://resolver.tudelft.nl/uuid:3e31e374-20f9-46a5-bb6d-bf6bd19b1c29

[9] Hans de Bruijn, Martijn Warnier, Marijn Janssen, The perils and pitfalls of explainable AI: Strategies for explaining algorithmic decision-making, Government Information Quarterly, Volume 39, Issue 2, 2022, 101666, ISSN 0740-624X, https://doi.org/10.1016/j.giq.2021.101666. (https://www.sciencedirect.com/science/article/pii/S0740624X21001027)

[10] As explained in Nexus by Yuval Harari

[11] Wachter, Sandra and Mittelstadt, Brent and Russell, Chris, Do large language models have a legal duty to tell the truth? (January 31, 2024). Royal Society Open Science, Available at SSRN: https://ssrn.com/abstract=4771884 or http://dx.doi.org/10.2139/ssrn.4771884 - while this paper is about LLM’s it contains a line of thought and insights that also applies to RaC.

[12] Fraser, Hamish, and Tom Barraclough. "Governing digital legal systems: insights on artificial intelligence and rules as code." (2024). https://apo.org.au/node/327339

[13] In the Unified Service Management (USM) method, there are only five main processes that an operationalised service (organisation) will need to support in relation to value the service adds: agree (contract management, decision making), operate (operations management), change (change management), recover (incident management) and improve (risk management). The USM method has been used by many organisations and is also (being) integrated within the NORA (Dutch Governmental Reference Architecture). See https://usmwiki.com/index.php/Process_model

[14] This often does require verification of attestation revocation, which can and should be performed in a way that is not linkable: the issuing party does not get to know about the usage of the issued information. The current ARF (v1.4) does not prescribe how to do this, other than that the signed attestation may provide a URL that refers to a revocation list. This seems to be one of the reasons for a delay in the planning of rolling out EuDI Wallets into production. https://epicenter.works/en/content/finally-a-no-to-overreaching-id-systems

[15] https://regels.overheid.nl/docs/assets/files/AOW-Leeftijd-vindplaatsten-in-wetgeving-6f21171f35f6fb99bf580601f64a5c51.pdf

[16] https://www.svb.nl/nl/aow/aow-leeftijd/uw-aow-leeftijd

[17] To find it on the YourEurope platform, search for “AOW pension age” in https://europa.eu/youreurope/search-results/index_en.htm?countryCode=NL&categories=B9;U2;W2;X2 which will lead you to: www.svb.nl/en/aow-pension/aow-pension-age/your-aow-pension-age

[18] We are going to publish about this in our GitLab at the end of 2024: https://gitlab.com/digicampus/gt4a/wp2-2024

[19] As explained in this paper on anti-corruption mechanisms, which also seems applicable more generally to public services, where there is likewise a group of people who fear government services: https://images.transparencycdn.org/images/2019_Toolkit_EngagingCitizensAnti-CorruptionMechanisms_English.pdf

[20] The Dutch Governmental Reference Architecture (NORA, noraonline.nl) initially served as an example for the European Interoperability Reference Architecture. While it supports parts of the views expressed in this article, there still seems to be much to improve, which is out of scope of this article. But yes, these are to be seen as agreements too, and the NORA has actually become legally binding to some extent.

[21] As also seems to be expressed in the recent report on EU competitiveness by M. Draghi https://commission.europa.eu/topics/strengthening-european-competitiveness/eu-competitiveness-looking-ahead_en