As part of the Test Bed’s commitment to open source and transparency, a source code quality dashboard has been published for its open-source validators.

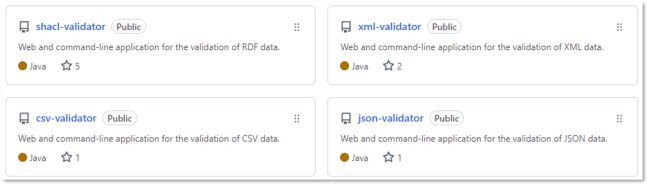

The Test Bed’s validators for XML, RDF, JSON and CSV content are popular components that provide data validation and quality assurance, either as standalone services or as part of complete conformance testing scenarios. Their setup is configuration-driven, with several deployment options ranging from use “as-a-service” to flexible on-premises installations.

Validators are set up via configuration, meaning that users don’t need to make extensions at source-code level to start using them. All core components are published as Docker images on the public Docker Hub, from where they can be downloaded (“pulled” in Docker terms) and reused as-is. Nonetheless, the Test Bed team publishes the source code for the validators’ components as open-source repositories on GitHub, both to increase transparency and to foster discussion and the collection of feedback.

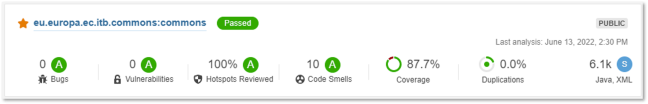

To make the validators even more transparent, the Test Bed team has now made available a quality dashboard for their source code. The dashboard is built on the popular SonarCloud service and maintains, per validator, source code quality results, including the number of bugs, vulnerabilities and bad practices, as well as unit test success and test coverage reports.

These metrics serve the validators’ development process, in which quality reports are used to improve ongoing development work and to support published releases. They also act as a public quality stamp, giving users a good measure of the validators’ quality and maintenance effort.
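For readers who would rather read these figures programmatically than through the dashboard, the sketch below shows one possible approach. It assumes SonarCloud’s public Web API endpoint for component measures and its standard metric keys; the project key and the sample response are made up for illustration and do not correspond to any actual validator project.

```python
from urllib.parse import urlencode

# Assumption: SonarCloud's public Web API endpoint for component measures.
SONAR_MEASURES_URL = "https://sonarcloud.io/api/measures/component"

# Metric keys matching the figures named above: bugs, vulnerabilities,
# bad practices (reported as "code smells") and test coverage.
METRIC_KEYS = ("bugs", "vulnerabilities", "code_smells", "coverage")


def build_measures_url(project_key: str, metric_keys=METRIC_KEYS) -> str:
    """Return the URL that requests the given metrics for one project."""
    query = urlencode(
        {"component": project_key, "metricKeys": ",".join(metric_keys)}
    )
    return f"{SONAR_MEASURES_URL}?{query}"


def summarise_measures(payload: dict) -> dict:
    """Flatten the response's measure list into a {metric: value} mapping."""
    measures = payload.get("component", {}).get("measures", [])
    return {m["metric"]: m["value"] for m in measures if "value" in m}


# Hypothetical project key and made-up sample payload, for illustration only.
example_url = build_measures_url("example-org_example-validator")
sample_payload = {
    "component": {
        "key": "example-org_example-validator",
        "measures": [
            {"metric": "bugs", "value": "0"},
            {"metric": "coverage", "value": "81.5"},
        ],
    }
}
summary = summarise_measures(sample_payload)
```

The URL construction and the response parsing are kept separate so that the parsing step can be checked offline. To fetch live data, pass the generated URL to any HTTP client and hand the decoded JSON to `summarise_measures`.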

Maintaining and publishing source code quality reports is the latest addition to the Test Bed’s presence on GitHub. It complements the Test Bed’s existing open-source practices and use of available tooling. Specifically:

- The repositories’ master branches reflect the latest published builds on the Docker Hub.

- Quality metrics are published and link to the detailed quality dashboard.

- GitHub Actions are used for automatic integration and quality builds upon pushed changes.

- Webhooks are configured to redeploy managed validators with the latest changes.

- Releases are made to publish milestones and notify subscribed users.

- The repositories’ issue management is used for feedback collection and discussions.

As mentioned previously, users need not use the validators’ published source code or releases directly, as in most cases new validators can be defined via configuration and hosted by the Test Bed. Details on how to do this are provided in the validators’ guides (available for the XML, RDF, JSON and CSV cases), which include step-by-step instructions on how to set up a new validator instance, whether managed by yourself or by the Test Bed on your behalf.

If you are new to the Test Bed, general details can be found in its Joinup space, with its value proposition being a good starting point. Finally, if you are interested in receiving the Test Bed’s news, apart from subscribing to notifications you may also follow Interoperable Europe’s social media channels (Twitter, LinkedIn) for updates on the Test Bed and other interoperability solutions.
