- Introduction

Open Standards for Linked Organisations (OSLO) is the successor to the OSLO initiative launched in 2012 by V-ICT-OR (the Flemish ICT Organisation), which laid the basis for an open semantic information standard. In 2016, the Flemish Agency of Information took the initiative to transform OSLO from Open Standards for Local Administrations into Open Standards for Linked Organisations, and to transfer its governance to the Flemish Steering Committee for Information and ICT Policy (Vlaams stuurorgaan Informatie en ICT-beleid).

As the name suggests, OSLO aims to standardise data exchange in the broadest sense by facilitating and recognising semantic and technical data standards.

The objectives of OSLO are to:

- Facilitate semantic and technical standardisation through an open process;

- Maintain existing standards;

- Ensure rules and governance are respected;

- Provide a publication platform;

- Promote standards; and

- Provide training and support for the adoption of data standards.

The OSLO approach rests on two principles: reusing existing international standards (W3C, the EU ISA Core Vocabularies, INSPIRE, etc.) and involving a broad range of stakeholders from government, industry and academia. The latter is often referred to as the Triple Helix approach.

OSLO currently comprises more than 18 domain models containing over 1,000 definitions, created by more than 262 contributors from 107 organisations. The data standards are listed on https://data.vlaanderen.be/ns. Additional information can be found in the presentation given by Raf Buyle at the SEMIC 2018 conference.

- Process and methodology for the development and recognition of a data standard

Drawing on best practices from W3C, ISA and OpenStand, among others, a methodology was created for registering, developing, changing and phasing out data standards.

The process and methodology are based on the principles of openness and transparency: they encourage broad stakeholder participation while ensuring stability, quality and usability. The methodology also offers enough flexibility for controlled changes to, and maintenance of, the agreements and standards, so that they can keep pace with the ever-changing environment in which the data standards are used.

The methodology consists of five processes, each fully documented and communicated to all stakeholders. The figure above illustrates how the different processes interact with one another. These processes are:

- Registration of a data standard in development

- Development of the data specifications

- Publication and technical anchoring of the data standard

- Change management

- Phasing out of a standard

- Tools

Publication - Toolchain

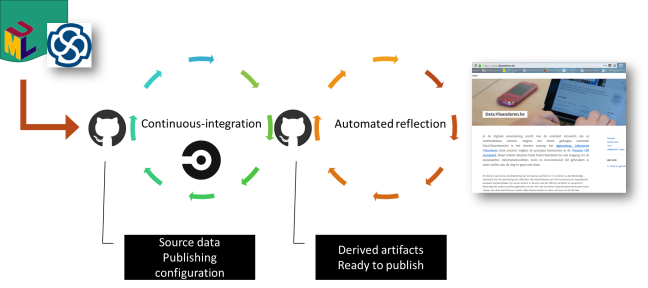

During OSLO², several tools were developed to minimise the effort needed to roll out and maintain any change to the system. The main tool, the toolchain, publishes the data standards automatically on the data.vlaanderen.be website.

The toolchain (illustrated in the figure above) is a sequence of tools that automatically generates artefacts ready for publication on the data.vlaanderen.be website. The source code is maintained on GitHub.

The main steps of the toolchain are:

- Data standards are modelled as UML diagrams in Enterprise Architect.

- EA2RDF converts the Enterprise Architect UML diagrams to RDF, a format that is easy to process further.

- Specgenerator takes the output of EA2RDF and generates artefacts such as the vocabulary in RDF and HTML, the application profile in HTML, SHACL templates and a JSON-LD context.
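The Specgenerator itself is maintained on GitHub and is not reproduced here. To illustrate one of its outputs, the sketch below shows how a JSON-LD context could be derived from a list of vocabulary terms. The `Term` record, its `kind` values, the `build_jsonld_context` function and the example.org URIs are all hypothetical assumptions for illustration, not the toolchain's actual data model or API.

```python
from __future__ import annotations

import json
from dataclasses import dataclass
from typing import Optional

XSD = "http://www.w3.org/2001/XMLSchema#"


@dataclass(frozen=True)
class Term:
    """A class or property as it might appear in (hypothetical) EA2RDF output."""
    label: str                      # short name used as the JSON key
    uri: str                        # full URI of the term
    kind: str                       # "class", "object" (points to a resource) or "literal"
    datatype: Optional[str] = None  # XSD datatype for literal-valued properties


def build_jsonld_context(terms: list[Term]) -> dict:
    """Map each term label to its URI, adding @type hints for properties."""
    context: dict = {}
    for term in terms:
        if term.kind == "class":
            # Classes only need a label-to-URI mapping
            context[term.label] = term.uri
        elif term.kind == "object":
            # "@type": "@id" tells JSON-LD processors that the values are URIs
            context[term.label] = {"@id": term.uri, "@type": "@id"}
        else:
            entry = {"@id": term.uri}
            if term.datatype:
                entry["@type"] = term.datatype
            context[term.label] = entry
    return {"@context": context}


# Hypothetical terms; example.org stands in for a real OSLO namespace
TERMS = [
    Term("Address", "https://example.org/ns/address#Address", "class"),
    Term("houseNumber", "https://example.org/ns/address#houseNumber", "literal", XSD + "string"),
    Term("municipality", "https://example.org/ns/address#municipality", "object"),
]

if __name__ == "__main__":
    print(json.dumps(build_jsonld_context(TERMS), indent=2))
```

Generating the context from the same source as the vocabulary keeps the JSON keys and the published URIs in sync, which is the kind of consistency an automated toolchain is meant to guarantee.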

Publication - Register of data standards

data.vlaanderen.be provides a register of all published data standards. OSLO² distinguishes three categories of standards, namely:

- Recognised standards;

- Candidate standards; and

- Standards in development.

Each standard has its own web page describing its context. Additional background information is also provided, such as the project charter and links to the presentations and webcasts of the thematic working groups.

Validation - OSLO² Validator

A validator was developed to check RDF graphs against the application profiles defined within OSLO². Validation is based on SHACL (Shapes Constraint Language), a language for validating RDF graphs against a set of conditions, which has been a W3C Recommendation since 20 July 2017.
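The OSLO² validator uses real SHACL shapes. To show what such a check does, the sketch below reimplements a small subset of SHACL Core semantics (a target class plus `sh:minCount`, `sh:maxCount` and `sh:datatype` on a property path) over a graph modelled as a set of triples. It is a simplified illustration, not a SHACL engine and not the OSLO² validator; the class names, the `validate` function and the example.org data are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional, Set, Tuple, Union

RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
XSD_STRING = "http://www.w3.org/2001/XMLSchema#string"


@dataclass(frozen=True)
class Literal:
    value: str
    datatype: str = XSD_STRING


# A graph is modelled as a set of (subject, predicate, object) triples
Triple = Tuple[str, str, Union[str, Literal]]


@dataclass(frozen=True)
class PropertyShape:
    """Subset of a SHACL property shape: sh:path, sh:minCount, sh:maxCount, sh:datatype."""
    path: str
    min_count: int = 0
    max_count: Optional[int] = None
    datatype: Optional[str] = None


@dataclass(frozen=True)
class NodeShape:
    """Subset of a SHACL node shape: sh:targetClass plus its property shapes."""
    target_class: str
    properties: Tuple[PropertyShape, ...]


def validate(graph: Set[Triple], shape: NodeShape) -> list[str]:
    """Return the constraint violations; an empty list means the graph conforms."""
    violations = []
    # Focus nodes: every subject typed with the shape's target class
    focus_nodes = {s for (s, p, o) in graph if p == RDF_TYPE and o == shape.target_class}
    for node in sorted(focus_nodes):
        for prop in shape.properties:
            values = [o for (s, p, o) in graph if s == node and p == prop.path]
            if len(values) < prop.min_count:
                violations.append(f"{node}: fewer than {prop.min_count} value(s) for {prop.path}")
            if prop.max_count is not None and len(values) > prop.max_count:
                violations.append(f"{node}: more than {prop.max_count} value(s) for {prop.path}")
            if prop.datatype is not None:
                for value in values:
                    if not isinstance(value, Literal) or value.datatype != prop.datatype:
                        violations.append(f"{node}: {prop.path} value is not a literal of type {prop.datatype}")
    return violations


EX = "https://example.org/"
GRAPH = {
    (EX + "address/1", RDF_TYPE, EX + "Address"),
    (EX + "address/1", EX + "houseNumber", Literal("12")),
    (EX + "address/2", RDF_TYPE, EX + "Address"),  # missing its house number
}
SHAPE = NodeShape(
    EX + "Address",
    (PropertyShape(EX + "houseNumber", min_count=1, max_count=1, datatype=XSD_STRING),),
)

if __name__ == "__main__":
    for violation in validate(GRAPH, SHAPE):
        print(violation)
```

Here `address/1` conforms and `address/2` is reported for its missing house number. A production setup would load the application profile's SHACL shapes into a full SHACL processor instead.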

Persistent URIs - Flemish URI standard for data

This URI standard describes the rules that URIs (Uniform Resource Identifiers) assigned by the Flemish government must follow. Because the URIs stay the same, the surrounding systems can evolve without breaking references to them. All persistent URIs should be created according to the following pattern:

http(s)://{domain}/{type}/{concept}(/{reference})

where:

- Domain is formed by the hostname. It should be a neutral, organisation-independent and timeless name.

- Type describes the nature of the underlying resource. The following classification is proposed for the type component of the URI: id (identification), doc (document) and ns (namespace). The main goal is to separate the representation of an object on the web from the object as it exists in reality.

- Concept describes the category of the resource. This classification takes its meaning from the domain and should be read as follows: {resource} is (a) {concept} – {type}.

- Reference identifies a specific instance of a resource and follows the pattern {reference-basis}(/{reference-version}).
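The pattern above can be checked and built mechanically. The sketch below parses a URI into its components, validates the type against id, doc and ns, and rebuilds the string. The `PersistentUri` class, `parse_uri`, the `as_type` helper and the data.example.be domain are hypothetical. In particular, `as_type` assumes that the document describing a resource shares the resource's concept and reference under the doc type; treat that as an illustrative assumption, not a rule taken from the standard.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, replace
from typing import Optional

ALLOWED_TYPES = {"id", "doc", "ns"}

# http(s)://{domain}/{type}/{concept}(/{reference-basis}(/{reference-version}))
URI_PATTERN = re.compile(
    r"^(?P<scheme>https?)://(?P<domain>[^/]+)/(?P<type>[^/]+)/(?P<concept>[^/]+)"
    r"(?:/(?P<basis>[^/]+)(?:/(?P<version>[^/]+))?)?$"
)


@dataclass(frozen=True)
class PersistentUri:
    domain: str
    uri_type: str
    concept: str
    reference_basis: Optional[str] = None
    reference_version: Optional[str] = None
    scheme: str = "https"

    def __post_init__(self) -> None:
        if self.uri_type not in ALLOWED_TYPES:
            raise ValueError(f"type must be one of {sorted(ALLOWED_TYPES)}, got {self.uri_type!r}")
        if self.reference_version is not None and self.reference_basis is None:
            raise ValueError("a reference version requires a reference basis")

    def __str__(self) -> str:
        parts = [self.domain, self.uri_type, self.concept]
        if self.reference_basis is not None:
            parts.append(self.reference_basis)
        if self.reference_version is not None:
            parts.append(self.reference_version)
        return f"{self.scheme}://" + "/".join(parts)

    def as_type(self, uri_type: str) -> PersistentUri:
        """Same concept and reference under another type (assumed convention, e.g. id -> doc)."""
        return replace(self, uri_type=uri_type)


def parse_uri(uri: str) -> PersistentUri:
    """Split a URI into its components; raises ValueError if it does not fit the pattern."""
    match = URI_PATTERN.match(uri)
    if match is None:
        raise ValueError(f"not a persistent URI: {uri}")
    return PersistentUri(
        domain=match["domain"],
        uri_type=match["type"],
        concept=match["concept"],
        reference_basis=match["basis"],
        reference_version=match["version"],
        scheme=match["scheme"],
    )


if __name__ == "__main__":
    uri = parse_uri("https://data.example.be/id/address/12345/2")
    print(uri)
    print(uri.as_type("doc"))
```

Keeping the type in its own path segment means an id URI for a real-world thing and a doc URI for the page describing it never collide, which is the separation the type component is meant to enforce.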

More information is available in the full text of the Flemish URI standard.

APIs

Although much of OSLO's scope revolves around semantic interoperability, OSLO also works to increase technical interoperability through the Generic Hypermedia API specifications.

These specifications describe the methods and operations used to query or modify data through the APIs. Several building blocks have been developed, namely:

- Pagination;

- CRUD operations; and

- Language selection/discovery
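Two of these building blocks, pagination and language selection, can be sketched as server-side helpers. The code below is a hypothetical illustration and does not reproduce the Generic Hypermedia API specification: the `paginate` and `select_language` functions, the `page` and `pageSize` query parameters and the link names `self`, `previous` and `next` are assumptions. Language selection is assumed to rely on the standard HTTP `Accept-Language` header with q-values.

```python
from __future__ import annotations

from urllib.parse import urlencode


def paginate(items: list, base_url: str, page: int, page_size: int) -> dict:
    """Return one page of items plus hypermedia links to the neighbouring pages."""
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be positive")

    def link(n: int) -> str:
        # Hypothetical query parameter names; the real specification may differ
        return f"{base_url}?{urlencode({'page': n, 'pageSize': page_size})}"

    start = (page - 1) * page_size
    links = {"self": link(page)}
    if page > 1:
        links["previous"] = link(page - 1)
    if start + page_size < len(items):
        links["next"] = link(page + 1)
    return {"items": items[start:start + page_size], "links": links}


def select_language(accept_language: str, available: list[str], default: str) -> str:
    """Pick the best available language for an Accept-Language header value."""
    candidates = []
    for position, part in enumerate(accept_language.split(",")):
        piece = part.strip()
        if not piece:
            continue
        tag, _, params = piece.partition(";")
        quality = 1.0
        params = params.strip()
        if params.startswith("q="):
            try:
                quality = float(params[2:])
            except ValueError:
                quality = 0.0
        # Sort key: higher q first; on ties, the earlier entry in the header wins
        candidates.append((quality, -position, tag.strip().lower()))

    for quality, _, tag in sorted(candidates, reverse=True):
        if quality <= 0:
            continue
        if tag == "*":
            return default
        for option in available:
            # A request for "nl-BE" also matches a plain "nl" representation
            if option.lower() in (tag, tag.split("-")[0]):
                return option
    return default


if __name__ == "__main__":
    print(paginate(list(range(1, 26)), "https://api.example.org/addresses", page=2, page_size=10))
    print(select_language("fr;q=0.5, nl-BE, en;q=0.8", ["en", "nl"], "en"))
```

Returning the neighbouring page links inside the response lets a client follow `next` until it disappears, without computing page URLs itself; that is the hypermedia style the specification's name refers to.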

More information about the API specifications can be found on the GitHub page.