Quick reference

Use the following links to jump to a specific section:

- How does the Test Bed work?

- When to use (and not to use) the Test Bed

- Standalone validators

- Technical capabilities (technology-specific and specification/domain-specific)

- Deployment models

- Test Bed releases and receiving release notifications

- Test Bed operational window (for DIGIT-hosted services)

- Licencing and cost

How does the Test Bed work?

The Test Bed is a complete platform consisting of both software and hardware components with the purpose of facilitating testing. The particular focus in this case is conformance and interoperability testing, ensuring that tested systems conform to a specification’s requirements and can interoperate consistently with conformant peer systems.

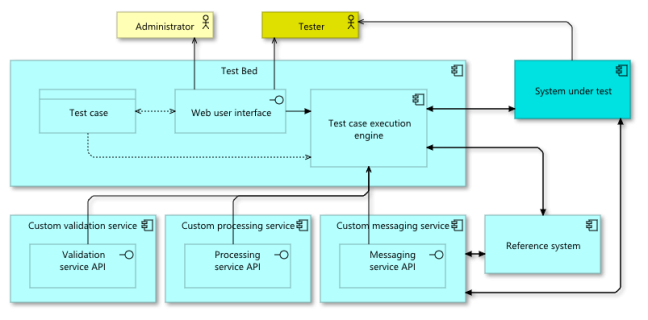

The following diagram illustrates the high-level operation of the Test Bed, in which the highlighted elements represent the system being tested and its tester:

Testing is organised in test suites, collections of one or more test cases, that define the scenarios to test for. Such test cases link to specifications and are assigned to systems under test once these are configured to claim conformance to them. The management of specifications and their test cases, organisations and their users, as well as the execution of tests and subsequent monitoring and reporting, take place through the Test Bed’s web user interface.
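The relationship between test suites, test cases, specifications and systems under test can be pictured with a small data model. This is only an illustrative sketch: the class and field names below (`CaseDefinition`, `SuiteDefinition`, `SystemUnderTest`, `assigned_test_cases`) are hypothetical and do not reflect the Test Bed's internal model.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class CaseDefinition:
    """A test case and the specification it links to (hypothetical model)."""
    identifier: str
    specification: str


@dataclass
class SuiteDefinition:
    """A test suite: a named collection of one or more test cases."""
    name: str
    test_cases: list[CaseDefinition]


@dataclass
class SystemUnderTest:
    """A system and the specifications it claims conformance to."""
    name: str
    claimed_specifications: set[str] = field(default_factory=set)


def assigned_test_cases(system: SystemUnderTest,
                        suites: list[SuiteDefinition]) -> list[str]:
    """Return the identifiers of test cases assigned to the system,
    i.e. those linked to a specification the system claims to conform to."""
    return [
        case.identifier
        for suite in suites
        for case in suite.test_cases
        if case.specification in system.claimed_specifications
    ]


suites = [
    SuiteDefinition("Invoices", [
        CaseDefinition("ubl-tc-1", "UBL 2.1"),
        CaseDefinition("ubl-tc-2", "UBL 2.1"),
    ]),
    SuiteDefinition("Catalogues", [CaseDefinition("dcat-tc-1", "DCAT-AP")]),
]
system = SystemUnderTest("ERP system", {"UBL 2.1"})
assigned = assigned_test_cases(system, suites)
```

In this sketch the system only receives the two invoice test cases, because it has not claimed conformance to the second specification.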

Test cases are authored in the GITB Test Description Language (TDL), an XML-based language for defining the steps that must complete successfully and the actors involved. One actor is always realised by the system under test, while the others are either simulated or realised by other actual systems. Test steps range from validation and messaging to arbitrary processing that manipulates and evaluates the test session’s state, either per step or by checking the overall session’s context. A good example of the latter is ensuring conversational consistency by validating that a received message corresponds to an earlier request.
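To make the idea of conversational consistency concrete, the following Python sketch keeps a session context of sent requests and checks that a received response refers back to one of them. It is not TDL and not Test Bed code; the `SessionContext` class and the message fields (`id`, `in_reply_to`, `order_ref`) are assumptions made purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class SessionContext:
    """Hypothetical stand-in for the state kept during a test session."""
    # Maps a request identifier to the request that was sent earlier.
    sent_requests: dict[str, dict] = field(default_factory=dict)

    def record_request(self, request: dict) -> None:
        """Remember a request so that later responses can be matched to it."""
        self.sent_requests[request["id"]] = request

    def check_response(self, response: dict) -> list[str]:
        """Return consistency errors; an empty list means the response
        corresponds to an earlier request."""
        errors = []
        request = self.sent_requests.get(response.get("in_reply_to"))
        if request is None:
            errors.append("response does not refer to a known request")
        elif response.get("order_ref") != request.get("order_ref"):
            errors.append("response order_ref differs from the request")
        return errors


ctx = SessionContext()
ctx.record_request({"id": "req-1", "order_ref": "PO-42"})
ok = ctx.check_response({"in_reply_to": "req-1", "order_ref": "PO-42"})
bad = ctx.check_response({"in_reply_to": "req-9", "order_ref": "PO-42"})
```

Here `ok` is an empty list, while `bad` reports that the response does not match any request recorded in the session.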

Each step’s operation takes place using the Test Bed’s built-in capabilities but, if needed, can delegate to external components. This is where the Test Bed shines: it can include custom and independent processing, messaging and validation extensions to address missing capabilities or domain-specific needs, by means of exchanges over a common web service API (the GITB service APIs). In using such extensions, the Test Bed acts as an orchestrator of built-in and externally provided capabilities, which makes it flexible enough to accommodate most conformance testing needs.
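The orchestration idea can be sketched as a dispatcher that looks for a built-in handler first and falls back to a registered extension. This is a simplified illustration under stated assumptions: the `Orchestrator` class and handler names are hypothetical, and the external handler is a local function standing in for what would really be a call to a remote service over the GITB service APIs.

```python
from typing import Callable

# A handler receives the step's input content and returns a result.
Handler = Callable[[str], dict]


def builtin_not_empty(content: str) -> dict:
    """Built-in check: the content must not be blank."""
    return {"handler": "built-in", "success": bool(content.strip())}


def external_invoice_check(content: str) -> dict:
    """Stand-in for a remote call to a custom validation service."""
    return {"handler": "external", "success": content.startswith("<Invoice")}


class Orchestrator:
    """Runs steps using built-in handlers or registered extensions."""

    def __init__(self) -> None:
        self.builtin: dict[str, Handler] = {"not-empty": builtin_not_empty}
        self.external: dict[str, Handler] = {}

    def register_external(self, name: str, handler: Handler) -> None:
        """Make an externally provided capability available to steps."""
        self.external[name] = handler

    def run_step(self, name: str, content: str) -> dict:
        """Prefer a built-in handler; otherwise delegate to an extension."""
        handler = self.builtin.get(name) or self.external.get(name)
        if handler is None:
            raise KeyError(f"no handler available for step '{name}'")
        return handler(content)


orchestrator = Orchestrator()
orchestrator.register_external("invoice", external_invoice_check)
builtin_result = orchestrator.run_step("not-empty", "   ")
external_result = orchestrator.run_step("invoice", "<Invoice/>")
```

The test case author only names the step; whether it is served by a built-in or an external capability is resolved by the orchestrator.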

When to use (and not to use) the Test Bed

The Test Bed’s focus is on conformance testing, specifically testing IT systems against semantic and technical specifications. The following table summarises when the Test Bed can offer a good solution but also cases when other solutions would be better suited.

| Use the Test Bed for: | Do not use the Test Bed for: |

|---|---|

|

|

In short, the Test Bed is very flexible but nonetheless best suited to test interactions between IT systems. Other aspects such as performance could potentially be addressed, but this should be more as an addition to the core conformance testing setup and not the main goal.

Standalone validators

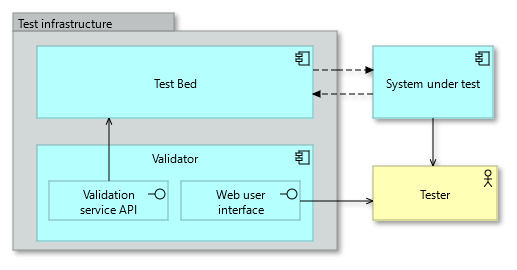

Not all projects require a full conformance testing platform to realise their testing needs. It is often the case that what is most needed is a means of validating content, either as a tool offered to the community or as a validation building block of a broader solution.

The Test Bed addresses this need by means of its standalone validation services. These are services operated independently from the Test Bed that focus specifically on receiving content via various channels and validating it against specifications to produce reports. Input can typically be provided via a web user interface, SOAP and REST web services, or a command line tool for offline use. Provided input and resulting reports are never stored, and access is fully anonymous. This allows validators to be used in any context and to be deployed and scaled according to a project’s needs.
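As a rough illustration of the REST channel, the sketch below builds a validation request using only Python's standard library. The endpoint URL is a placeholder and the JSON field names (`content`, `validationType`) are assumptions chosen for readability, not the documented API of any Test Bed validator; consult the API documentation of the specific validator for the actual paths and payload.

```python
import json
import urllib.request


def build_validation_request(base_url: str, content: str,
                             validation_type: str) -> urllib.request.Request:
    """Prepare a POST request carrying the content to validate.

    The '/validate' path and payload field names are hypothetical.
    """
    payload = {"content": content, "validationType": validation_type}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/validate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Accept": "application/json"},
        method="POST",
    )


request = build_validation_request(
    "https://validator.example.org/api/", "<Invoice/>", "ubl")

# Sending is left commented out because the endpoint is a placeholder:
# with urllib.request.urlopen(request) as response:
#     report = json.load(response)
```

Because validators do not store input or reports, a client like this would need to keep the returned report itself if it is needed later.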

For certain commonly used content types, the Test Bed provides a generic, reusable validator core that can be easily configured for use in specific instances. This allows users to:

- Create new instances by means of simple configuration and customise them as needed.

- Benefit from hosting on Test Bed resources and automatic service updates upon change.

The content types for which such extended support is available are:

- XML: For validation of XML-based specifications using XML Schema and Schematron (see overview, detailed guide, Docker image and source code).

- RDF: For validation of RDF-based specifications using SHACL shapes (see overview, detailed guide, Docker image and source code).

- JSON: For validation of JSON-based specifications using JSON Schema (see overview, detailed guide, Docker image and source code).

- CSV: For validation of CSV data using Table Schema specifications (see overview, detailed guide, Docker image and source code).

The following screenshot presents a validator for electronic invoices, reusing the Test Bed’s generic XML validation core via simple configuration:

All validators, regardless of whether or not they benefit from the discussed extended support, implement the standard validation service API. This is a SOAP web service API that allows them to be used by the Test Bed during tests as the implementation that handles the validation of content. This is an important point, as it allows validators to be offered both as standalone validation tools and as building blocks of broader scenario-based conformance tests (e.g. to validate messages received during exchanges).

The validators currently offered by the Test Bed are listed in the next section.

Technical capabilities

The following tables list the Test Bed’s technical capabilities, in terms of supported specifications, that can be reused when implementing a new conformance testing setup. The listing is split into:

- Technology-specific capabilities that focus on a given technology regardless of policy or business domain.

- Specification or domain-specific capabilities that would typically be meaningful for a given specification or within a given policy domain.

In each case services are further classified based on their purpose, specifically as messaging services, used to send and receive content to and from the Test Bed, and validation services, used for content validation.

Note that not finding a capability listed here is never a blocking point. It is simple, and often preferred by users, to cover missing capabilities by defining new extension services using the Test Bed’s template services.

Technology-specific

| Service type | Specification | Description | Public API |
|---|---|---|---|
| Messaging services | eDelivery AS4 | eDelivery AS4 integration via a Domibus access point. | Docker |
| | HTTP(S) | Sending and receiving content over HTTP(S). | - |
| | REST | Consuming and serving REST services. | - |
| | SOAP | Consuming and serving SOAP web services. | - |
| | TCP | Sending and receiving of content as TCP streams. | - |
| | UDP | Sending and receiving of content as UDP packets. | - |
| Validation services | ASiC | Validation of Associated Signature Containers (ASiC). | SOAP |
| | CSV¹ | Validation of CSV data using Table Schema. See the Test Bed’s CSV validation guide for detailed information. | UI, REST, SOAP, CLI, Docker |
| | GITB TDL | Validation of GITB TDL test suites. | UI, SOAP |
| | GraphQL | Validation of GraphQL queries against GraphQL schemas. | SOAP, REST |
| | JSON¹ | Validation of JSON using JSON schema. See the Test Bed’s JSON validation guide for detailed information. | UI, REST, SOAP, CLI, Docker |
| | Message digests | Validation of text digests using various algorithms. | SOAP |
| | SBD | Validation of Simple Business Documents. | UI, REST, SOAP |
| | SBDH | Validation of Simple Business Document Headers. | UI, REST, SOAP |
| | SHACL | Validation of SHACL shapes. | UI, SOAP, REST, CLI |
| | RDF¹ | Validation of RDF content using SHACL shapes. See the Test Bed’s RDF validation guide for detailed information. | UI, SOAP, REST, CLI, Docker |
| | Table Schema | Validation of Table Schema instances (used to formalise CSV and other tabular data specifications). | UI, REST, SOAP |
| | XML¹ | Validation of XML using XML Schema, Schematron, XPath, XMLUnit. See the Test Bed’s XML validation guide for detailed information. | UI, REST, SOAP, CLI, Docker |
| | XML digital signatures | Validation of XML signatures in arbitrary XML content. | SOAP |

¹ Extended support available for specific instances via configuration-driven setup and automated service updates.

In addition to the above supported specifications, the Test Bed also includes numerous low-level built-in capabilities for the arbitrary manipulation and validation of content (e.g. pattern matching via regular expressions).
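Two of the checks mentioned in this section, pattern matching via regular expressions and the validation of text digests, can be illustrated with Python's standard library. This is a generic sketch rather than the Test Bed's own implementation; the identifier pattern and function names are hypothetical.

```python
import hashlib
import hmac
import re

# Hypothetical identifier format: "INV-", a 4-digit year, then 6 digits.
INVOICE_ID = re.compile(r"INV-\d{4}-\d{6}")


def matches_pattern(value: str) -> bool:
    """Check that the whole value follows the expected pattern."""
    return INVOICE_ID.fullmatch(value) is not None


def digest_matches(text: str, expected_hex: str,
                   algorithm: str = "sha256") -> bool:
    """Recompute the digest of the text and compare it to the expected
    hexadecimal value using a constant-time comparison."""
    actual = hashlib.new(algorithm, text.encode("utf-8")).hexdigest()
    return hmac.compare_digest(actual, expected_hex.lower())


expected = hashlib.sha256(b"hello").hexdigest()
pattern_ok = matches_pattern("INV-2024-000123")
digest_ok = digest_matches("hello", expected)
```

Checks of this kind are typically combined with the validation services listed above, for example to verify an identifier's format before validating the full document.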

Specification or domain-specific

| Service type | Specification | Description | Public API |
|---|---|---|---|
| Messaging services | UN/FLUX | Integration with the UN/FLUX network. | - |
| Validation services | BRegDCAT-AP | Validation of RDF content against the BRegDCAT-AP (Specification of Registry of Registries) rules. | UI, SOAP, REST |
| | CII | Validation of electronic invoices as Cross Industry Invoice documents. | UI, REST, SOAP, CLI |
| | CloudEvents | Validation of JSON objects against the CloudEvents specification (supporting also custom schemas for project-specific rules). | UI, REST, SOAP |
| | CPSV-AP | Validation of RDF content against the CPSV-AP (Core Public Service Vocabulary – Application Profile) rules. | UI, SOAP, REST |
| | DCAT-AP | Validation of RDF content against DCAT-AP (Data Catalogue Vocabulary – Application Profile) rules. | UI, SOAP, REST |
| | DCAT-AP.de | Validation of RDF content against the German DCAT-AP extensions rules. | UI, SOAP, REST, CLI |
| | eGovERA | Validation of ArchiMate models (solutions and eGovERA models) against eGovERA modelling guidelines. | UI, REST, SOAP |
| | EIRA | Validation of ArchiMate models (solutions and Solution Architecture Templates – SATs) against the European Interoperability Reference Architecture and target SATs. | UI, REST, SOAP |
| | ELAP | Validation of ArchiMate models (solutions and Solution Architecture Templates – SATs) against the EIRA Library of Architecture Principles. | UI, REST, SOAP |
| | ELRD | Validation of European Land Registry Document instances. | UI, REST, SOAP, CLI |
| | ePO | Validation of RDF content for the e-Procurement Ontology. | UI, SOAP, REST |
| | ESPD | Validation of European Single Procurement Document instances. | UI, REST, SOAP |
| | GeoJSON | Validation of JSON content against the GeoJSON specification. | UI, REST, SOAP |
| | Kohesio | Validation of CSV data collection files for the Kohesio portal. | UI, REST, SOAP, CLI, Docker |
| | REM | Validation of Registered Electronic Mail (REM) evidences. | UI, REST, SOAP |
| | SEMIC Style Guide (OWL/SHACL) | Validation of OWL vocabularies and SHACL shapes against the guidelines of the SEMIC Style Guide. | UI, SOAP, REST |
| | UBL 2.1 Credit Notes | Validation of electronic credit notes as UBL 2.1 documents. | UI, REST, SOAP, CLI |
| | UBL 2.1 Invoices | Validation of electronic invoices as UBL 2.1 documents. | UI, REST, SOAP, CLI |
| | UBL 2.2 eProcurement | Validation of procurement process UBL documents as defined in the PEPPOL pre-award profiles (P001, P002 and P003). | UI, REST, SOAP |
| | UML to OWL | Validation of UML models defined in XMI format based on the SEMIC style guide, to generate an OWL ontology. | UI, REST, SOAP |

Note that the above listing of validators is not exhaustive, but presents the cases where a public API is offered and there is good reuse potential.

Deployment models

The Test Bed offers a flexible deployment model to suit most project needs. Projects using the Test Bed can choose to:

- Reuse the shared instance operated by DIGIT. This is a cloud-based environment shared with other users (i.e. multitenant) where each project is defined as a “community”. Communities can be set as public but are by default private to their members.

- Install an on-premise instance. Installation and update of such Test Bed instances is fully streamlined and allows projects to have full control of their deployment and use.

The simplest approach is typically to use the shared instance as this is free and removes operational concerns. Opting for an on-premise instance could be preferable however for projects that have access restrictions, specific non-functional needs or that prefer to have full operational control.

The Test Bed also supports the simple on-premise setup of sandbox instances by a project’s users. Project administrators can define a complete testing setup and provide this to their users to set up test environments for local testing. An example where this could be interesting is when an EU-wide project offers national administrations such preconfigured sandboxes for local testing and experimentation before engaging in formal acceptance tests in a shared environment.

Exploring the available deployment options and defining the best approach for a specific project is one of the first points addressed as part of its onboarding process.

Test Bed releases

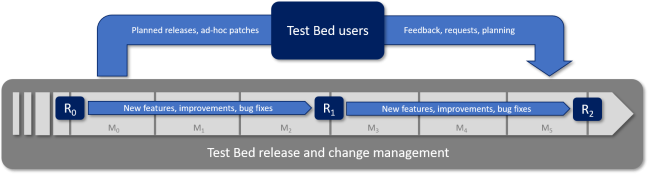

The Test Bed team is committed to making frequent releases to continuously improve the capabilities of the core Test Bed software. Specifically:

- Planned releases are made at approximately three-month intervals.

- Unplanned minor updates can also be made if merited by specific blocking issues.

The planning of releases could also exceptionally be adapted to match planning constraints of the Test Bed’s users (e.g. a project’s own release milestones). Once a new release is available, the environments operated directly by the Test Bed team are updated and users operating on-premise instances are notified. The update process for on-premise instances is streamlined, with new releases always guaranteed to be backwards compatible with existing data and test suites.

It is important to point out that the Test Bed team always prioritises user feedback for its change management. To propose a new issue or report a problem, send an email to the Test Bed team at DIGIT-ITB@ec.europa.eu. If you are a GitHub user, you can also post an issue on the Test Bed repositories' public issue trackers (for the core Test Bed software, or the XML, RDF, JSON and CSV validators).

Being notified of new releases

Whenever a new release is made, the Test Bed team will notify users that have requested included features with a brief email highlighting their availability. Apart from such specific updates, you can also be notified automatically of new releases by:

- Clicking the "Subscribe to this solution" link.

- Managing your profile's subscriptions to receive email notifications.

As a complement or alternative to using the Interoperable Europe Portal, you may also follow the Test Bed's GitHub repositories and select to be notified of new releases. You can do this for:

- The GITB Test Bed software (see its GitHub repository).

- Individual validators (see the GitHub repository for XML, RDF, JSON and CSV).

Test Bed operational hours

All Test Bed services hosted by DIGIT are available during the Test Bed's operational hours. These are defined as follows:

- The GITB Test Bed platform is online from Monday through Friday, between 05h00 and 20h00 CET.

- Hosted validators and documentation resources are online every day between 05h00 and 01h00 (of the next day) CET.

Note that published resources such as Docker images are not subject to this operational window and remain always available. If your needs are not covered by the provided window and you plan to make use of Test Bed services hosted by DIGIT, please contact the Test Bed team at DIGIT-ITB@ec.europa.eu.
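The two operational windows can be expressed as simple time checks, which may help when scheduling automated calls to the hosted services. The sketch below assumes the input is a naive `datetime` already expressed in CET and treats the end of each window as exclusive; both are assumptions, as are the function names.

```python
from datetime import datetime, time


def platform_online(moment: datetime) -> bool:
    """GITB Test Bed platform: Monday to Friday, 05h00 to 20h00 CET."""
    is_weekday = moment.weekday() < 5  # Monday is 0, Friday is 4
    return is_weekday and time(5, 0) <= moment.time() < time(20, 0)


def validators_online(moment: datetime) -> bool:
    """Hosted validators: every day, 05h00 to 01h00 of the next day CET.

    The window wraps past midnight and applies every day, so the only
    time outside it is between 01h00 and 05h00.
    """
    return not (time(1, 0) <= moment.time() < time(5, 0))


saturday_morning = datetime(2024, 1, 6, 10, 0)
monday_after_midnight = datetime(2024, 1, 8, 0, 30)
monday_night = datetime(2024, 1, 8, 3, 0)
```

For example, a Saturday morning falls within the validators' window but outside the platform's, and shortly after midnight the validators are still online.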

Licencing and cost

The Test Bed and validator components are published under the European Union Public Licence. Specifically:

- GITB Test Bed software: The GITB Test Bed software is published under a customised version of EUPL 1.1. This allows free usage but restricts liability and trademark use of CEN as the initial publishing organisation.

- Validators: All reusable validator components for XML, RDF, JSON and CSV validation are published under EUPL version 1.2.

- Reusable components: Apart from the GITB Test Bed software (see above), all other reusable components shared by the Test Bed in the Docker Hub are also published under EUPL version 1.2. This also includes the gitb-types library that encapsulates the GITB artefacts and types that most downstream projects would define as a dependency.

Usage of all components produced by the Test Bed is free for all users when operated on the users' own infrastructure.

When using the shared services hosted by DIGIT, both regarding the GITB Test Bed platform and validators, usage is free for all on-boarded users. In case specific users require dedicated infrastructure resources these may be subject to a cost-sharing agreement to be established as part of the on-boarding process.